The challenge

As AI systems entered high-stakes environments (finance, healthcare, infrastructure), there was growing urgency to define what “reliability” meant in practice. A leading initiative set out to create the first shared benchmark for AI risk and reliability but struggled to align researchers, developers, and industry buyers around measurable criteria and practical use.

Our approach

We led the product strategy from initial concept to a fundable benchmark plan. This included defining key risk dimensions and identifying real-world evaluation methods that could scale across domains. We worked closely with academic and industry stakeholders to prioritize what to measure and why, shaped early-stage pilot designs, and developed a positioning strategy that made the benchmark valuable not only for research but also for procurement and policy.

Outcome

The benchmark moved from concept to roadmap, with aligned stakeholders, scoped tasks, and clear messaging for funders and users. It now forms the foundation for a growing effort to make risk and reliability concrete in AI system selection and regulation.

What it shows

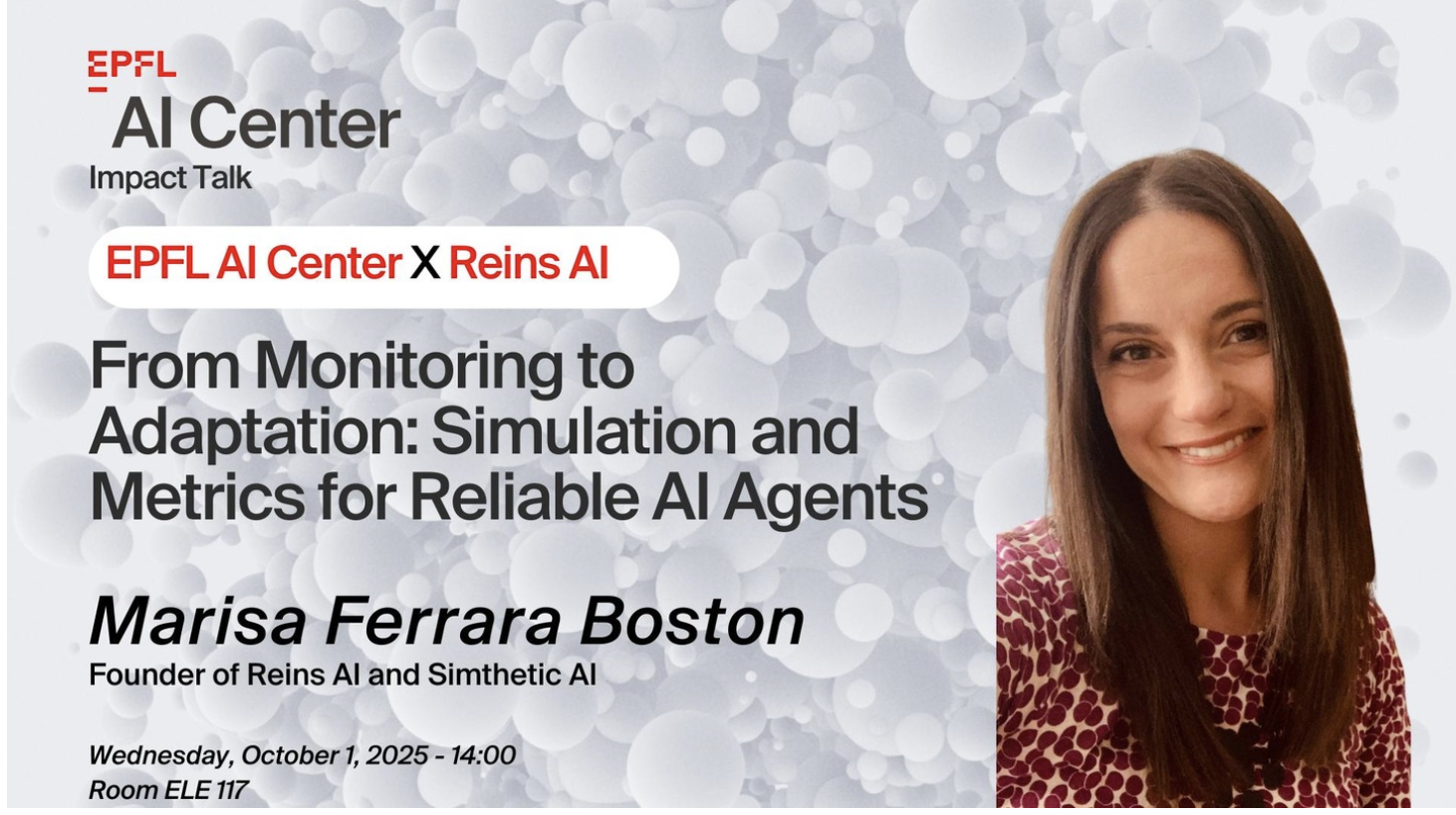

This work demonstrates Reins AI’s ability to lead through ambiguity. We turn broad concerns about AI risk into shared definitions, measurable signals, and evaluation infrastructure that teams can trust and act on.