The challenge

As generative AI entered mainstream tools, a major platform needed to ensure its image generation features could launch safely, align with internal policies, and meet external stakeholder expectations. While technical performance was improving rapidly, there was no shared standard for evaluating safety, appropriateness, or user trust at scale.

My role

In my prior role, I led the design and execution of the human feedback and evaluation strategy for this system. I worked across policy, product, and research teams to define success and surface failure modes through targeted evaluations. This included task design, feedback pipeline integration, and analysis of human responses that revealed disagreement patterns and emerging risks.

Outcome

The product launched with a robust evaluation framework in place, supporting not just policy compliance but iterative model improvement. Human feedback became a core part of the system’s development loop, giving internal stakeholders clarity and confidence about readiness.

What it shows

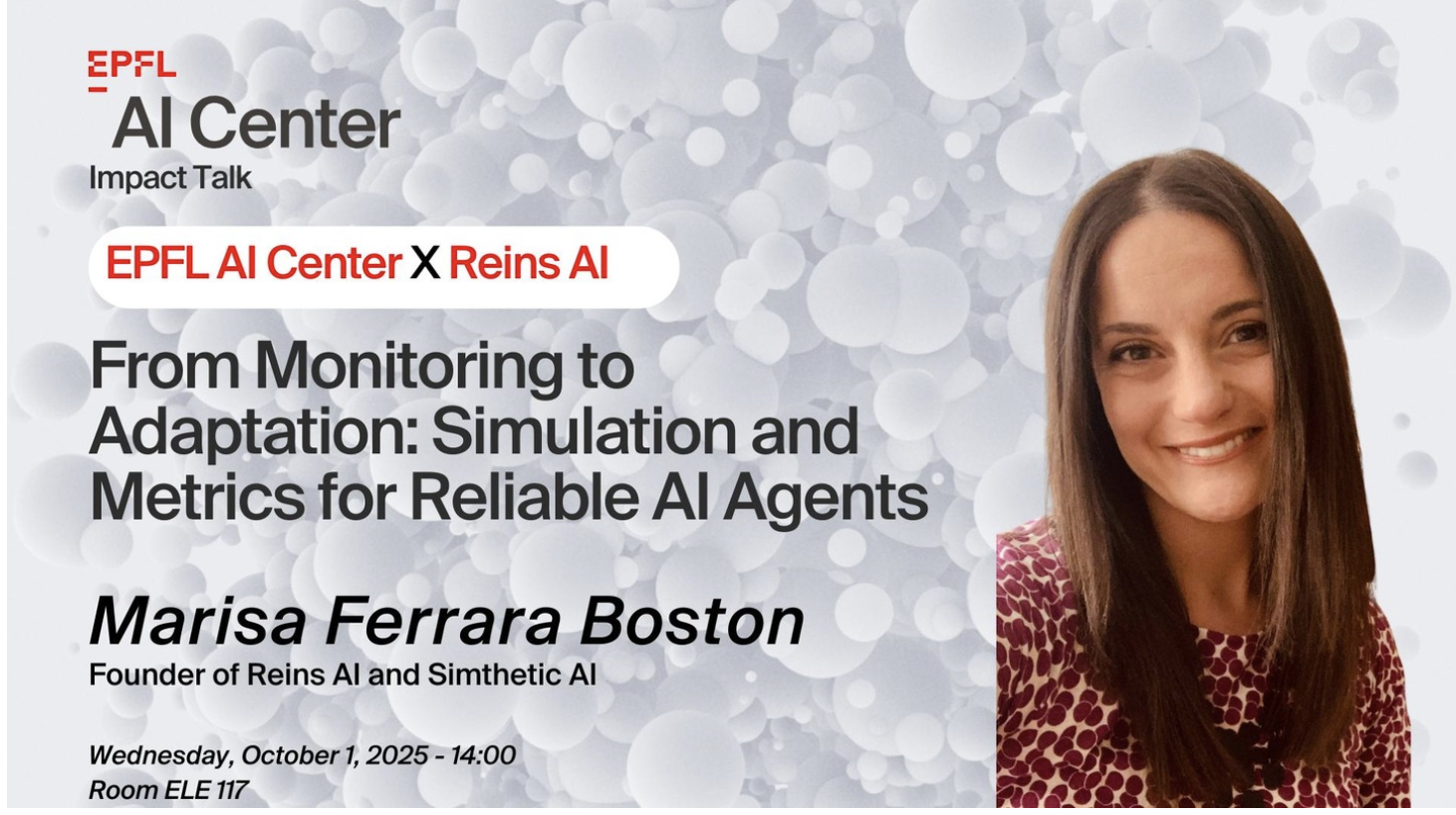

This project illustrates how thoughtful human evaluation can de-risk complex generative AI features. At Reins AI, we now bring these same principles (cross-functional coordination, task design, and decision-aligned feedback) to client systems across domains.